In August 2021, Apple revealed its plans to scan iPhones for images of child sexual abuse. The move drew applause from child protection groups but raised concerns among privacy and security experts that the feature could be misused.

Apple initially planned to include the Child Sexual Abuse Material (CSAM) scanning technology in iOS 15, but it has instead delayed the feature's rollout indefinitely to solicit feedback before a full release.

So why did the CSAM detection feature become a subject of heated debate, and what made Apple postpone its rollout?

What Does Apple's Photo-Scanning Feature Do?

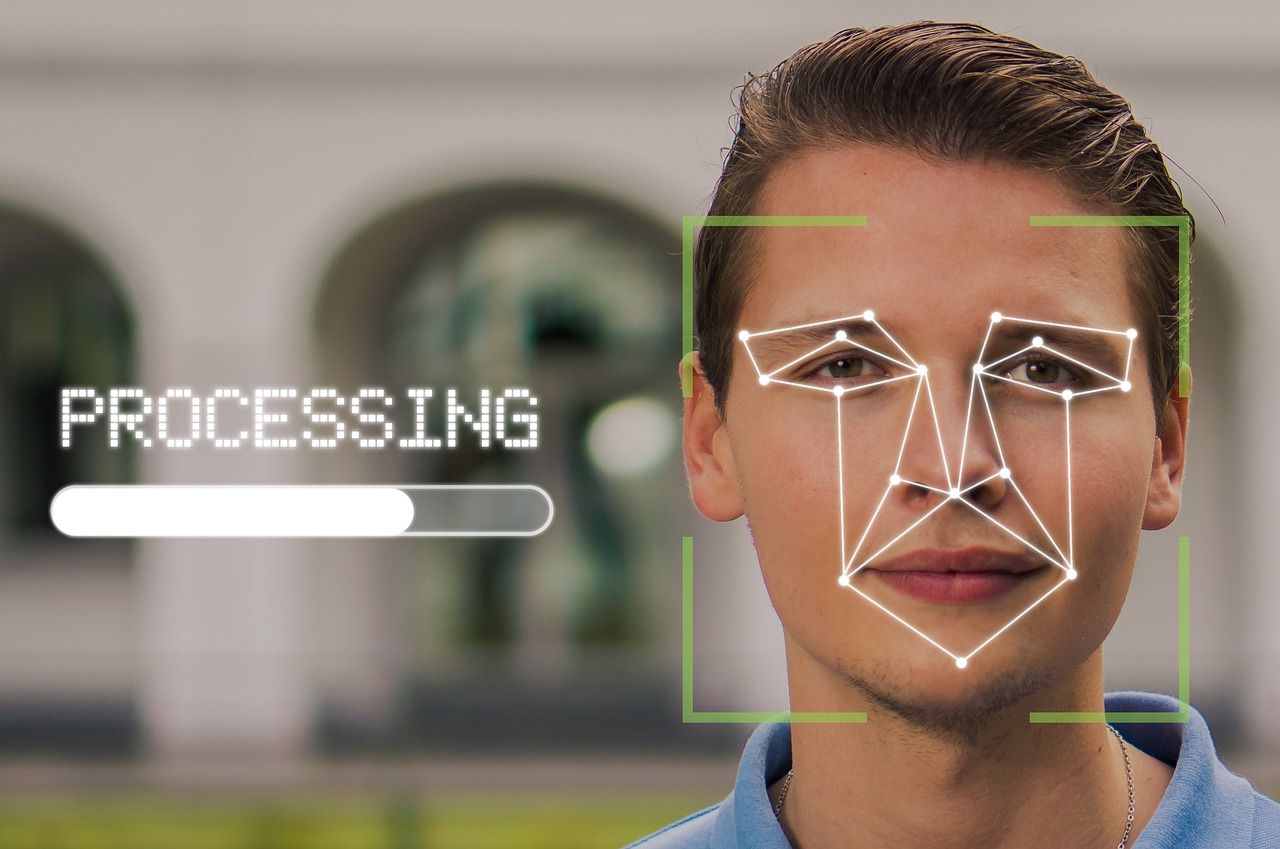

Apple announced the photo-scanning feature in the hope of combating child sexual abuse. Photos on Apple users' devices would be scanned for child sexual abuse material using the "NeuralHash" algorithm created by Apple.

In addition, Apple devices used by children would have a safety feature that automatically blurs adult pictures a child receives, and the child would be warned twice if they tried to open them.

Apart from minimizing exposure to adult content, if parents register the devices owned by their children for additional safety, the parents would be notified in case the child receives explicit content from anyone online.

As for adults who use Siri to look for anything that sexualizes children, Siri won't run that search and will suggest alternatives instead.

Data from any device containing 10 or more photos deemed suspicious by the algorithms will be decrypted and subjected to human review.

If those photos, or any others on the device, turn out to match anything in the database provided by the National Center for Missing and Exploited Children, the match will be reported to authorities and the user's account will be suspended.
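The threshold step described above can be illustrated with a minimal sketch. It is not Apple's implementation: the hash strings, the function names, and the plain set lookup are all hypothetical, and the threshold of 10 is simply the figure cited in this article.

```python
# Illustrative sketch only; names and values are assumptions, not Apple's code.
REVIEW_THRESHOLD = 10  # figure cited in this article


def count_matches(photo_hashes, known_hashes):
    """Count how many photo hashes appear in the set of known hashes."""
    return sum(1 for h in photo_hashes if h in known_hashes)


def needs_human_review(photo_hashes, known_hashes, threshold=REVIEW_THRESHOLD):
    """Return True once the number of matching photos reaches the threshold."""
    return count_matches(photo_hashes, known_hashes) >= threshold


# Hypothetical database of known hashes.
known_hashes = {f"known-{i}" for i in range(20)}

# Nine matching photos plus two unrelated ones: below the threshold.
library = [f"known-{i}" for i in range(9)] + ["holiday-1", "holiday-2"]
below = needs_human_review(library, known_hashes)

# One more matching photo brings the count to ten: the account is flagged.
library.append("known-9")
at_threshold = needs_human_review(library, known_hashes)

print(below, at_threshold)
```

In this sketch a single match never triggers review on its own; only the accumulated count does, which is the point of having a threshold at all.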

Main Concerns Regarding the Photo-Scanning Feature

The CSAM detection feature would have gone live with the launch of iOS 15 in September 2021, but in the face of widespread outcry, Apple decided to take more time to collect feedback and make improvements to this feature. Here is Apple's full statement on the delay:

"Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material.

Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features."

Many of the concerns about Apple's photo-scanning feature revolve around privacy; the rest include the probable inaccuracy of the algorithms and potential misuse of the system or its loopholes.

Let's break it down into four parts.

Potential Misuse

Knowing that any material matching child pornography or known images of child sexual abuse will land a device on the "suspicious" list could spur cybercriminals into action.

They can intentionally bombard a person with inappropriate content through iMessage, WhatsApp, or any other means and get that person's account suspended.

Apple has assured users that they can file an appeal if their accounts are suspended due to a misunderstanding.

Insider Abuse

Although designed for a benevolent cause, this feature can turn into a total disaster for certain people if their devices are registered into the system, with or without their knowledge, by relatives interested in monitoring their communication.

Even if that doesn't happen, Apple has, at the end of the day, created a backdoor that makes users' data accessible. Whether someone uses it to access other people's personal information is now only a matter of motivation and determination.

It not only facilitates a major breach of privacy but also paves the way for abusive, toxic, or controlling relatives, guardians, friends, lovers, caretakers, and exes to further invade someone's personal space or restrict their liberty.

On the one hand, it's meant to combat child sexual abuse; on the other, it can be used to further perpetuate other kinds of abuse.

Government Surveillance

Apple has always touted itself as a more privacy-conscious brand than its competitors. But now, it might be stepping onto a slippery slope of having to fulfill never-ending government demands for access to user data.

The system it has created to detect child sexual abuse material could be used to detect any sort of content on phones. That means governments with authoritarian leanings could monitor users on a more personal level if they get their hands on it.

Oppressive or not, government involvement in your daily and personal life can be unnerving, and is an invasion of your privacy. The idea that you only need to be worried about such invasions if you've done something wrong is flawed thinking, and fails to see the aforementioned slippery slope.

False Alarms

One of the biggest concerns of using algorithms to match pictures with the database is false alarms. Hashing algorithms can mistakenly identify two photos as matches even when they aren't the same. These errors, called "collisions," are especially alarming in the context of child sexual abuse content.

Researchers found several collisions in "NeuralHash" after Apple announced it would use the algorithm for scanning images. Apple responded to questions about false alarms by pointing out that a human would review the results before any action was taken, so people need not worry.
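To see how two different pictures can end up with the same hash, here is a toy sketch. It uses a simple "average hash" on tiny grayscale grids, which is a stand-in chosen for illustration and not NeuralHash itself; the function names and pixel values are made up.

```python
# Toy perceptual hash for illustration; this is NOT Apple's NeuralHash.


def average_hash(pixels):
    """One bit per pixel: 1 if the pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return tuple(1 if p > mean else 0 for p in flat)


def hamming_distance(a, b):
    """Number of bit positions where two equal-length hashes differ."""
    return sum(x != y for x, y in zip(a, b))


# Two clearly different 2x2 grayscale "images" (hypothetical pixel values).
image_a = [[200, 10], [10, 200]]
image_b = [[90, 60], [50, 120]]
# A third image with the opposite bright/dark pattern.
image_c = [[10, 200], [200, 10]]

hash_a = average_hash(image_a)
hash_b = average_hash(image_b)
hash_c = average_hash(image_c)

# image_a and image_b differ pixel by pixel, yet their hashes are identical.
collision = image_a != image_b and hash_a == hash_b
print(collision, hamming_distance(hash_a, hash_c))
```

Because the hash keeps only a coarse summary of each image, unrelated pictures that share that summary are indistinguishable to the matcher, which is why a collision can produce a false alarm.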

Is Apple's CSAM Pause Permanent?

Apple's proposed feature has many pros and cons, and each of them carries real weight. It's still unclear what specific changes Apple could introduce to the CSAM-scanning feature to satisfy its critics.

It could limit the scanning to shared iCloud albums instead of involving users' devices. Apple is very unlikely to drop the feature altogether, since the company isn't typically inclined to back down from its plans.

However, the widespread backlash and Apple's decision to hold off make it clear that companies should involve the research community from the beginning, especially for an untested technology.
